With the aid of open-source tools, Internet researcher Lawrence Alexander gathered and visualised data on nearly 20,500 pro-Kremlin Twitter accounts, revealing the massive scale of information manipulation attempts on the RuNet. In what is the first part of a two-part analysis, he explains how he did it and what he found.

RuNet Echo has previously written about the efforts of the Russian “Troll Army” to inject pro-Kremlin rhetoric into social networks and online media websites. Twitter is no exception, and multiple users have observed Twitter accounts tweeting similar statements during and around key breaking news and events. Increasingly active throughout Russia’s interventions in Ukraine, these “bots” have been designed to look like real Twitter users, complete with avatars.

But the evidence in this two-part analysis points to their role in an extensive program of disinformation.

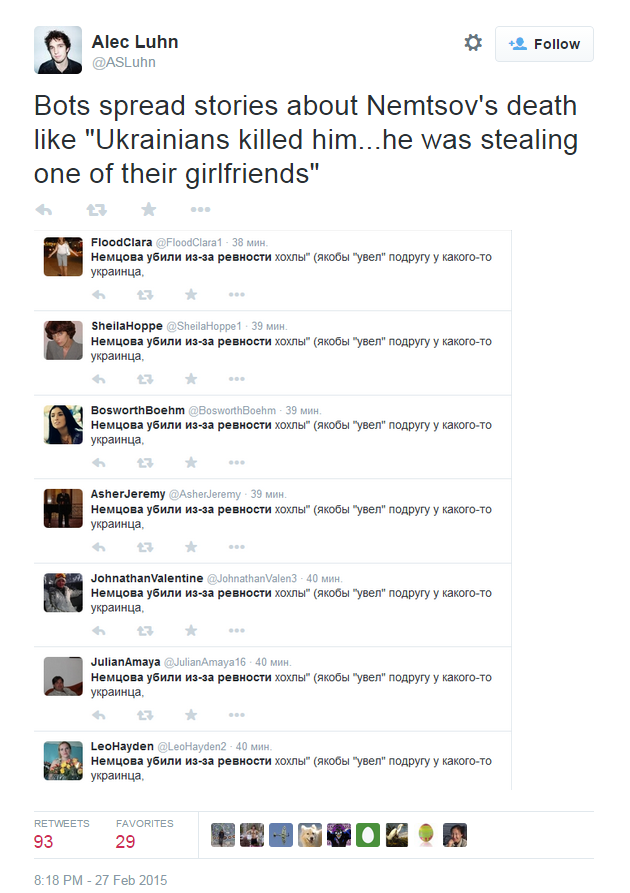

Alec Luhn, a US journalist reporting on Russian affairs, observed how mere hours after the shooting of Boris Nemtsov on February 27th, a group of Twitter accounts were already attempting to sway the narrative:

Using the open-source NodeXL tool, I collected and imported a complete list of accounts tweeting that exact phrase into a spreadsheet. From that list, I also gathered and imported an extended community of Twitter users, made up of the friends and followers of each account. It was going to be an interesting test: if the slurs against Nemtsov were just a minor case of rumour-spreading, they probably wouldn’t be coming from more than a few dozen users.

But once the software had finished crunching data, the full scale of the network was revealed: a staggering 2,900 accounts. This figure is perhaps understandable: for a fake Twitter account to be credible, it needs plenty of followers—which in turn requires more supporting bots.

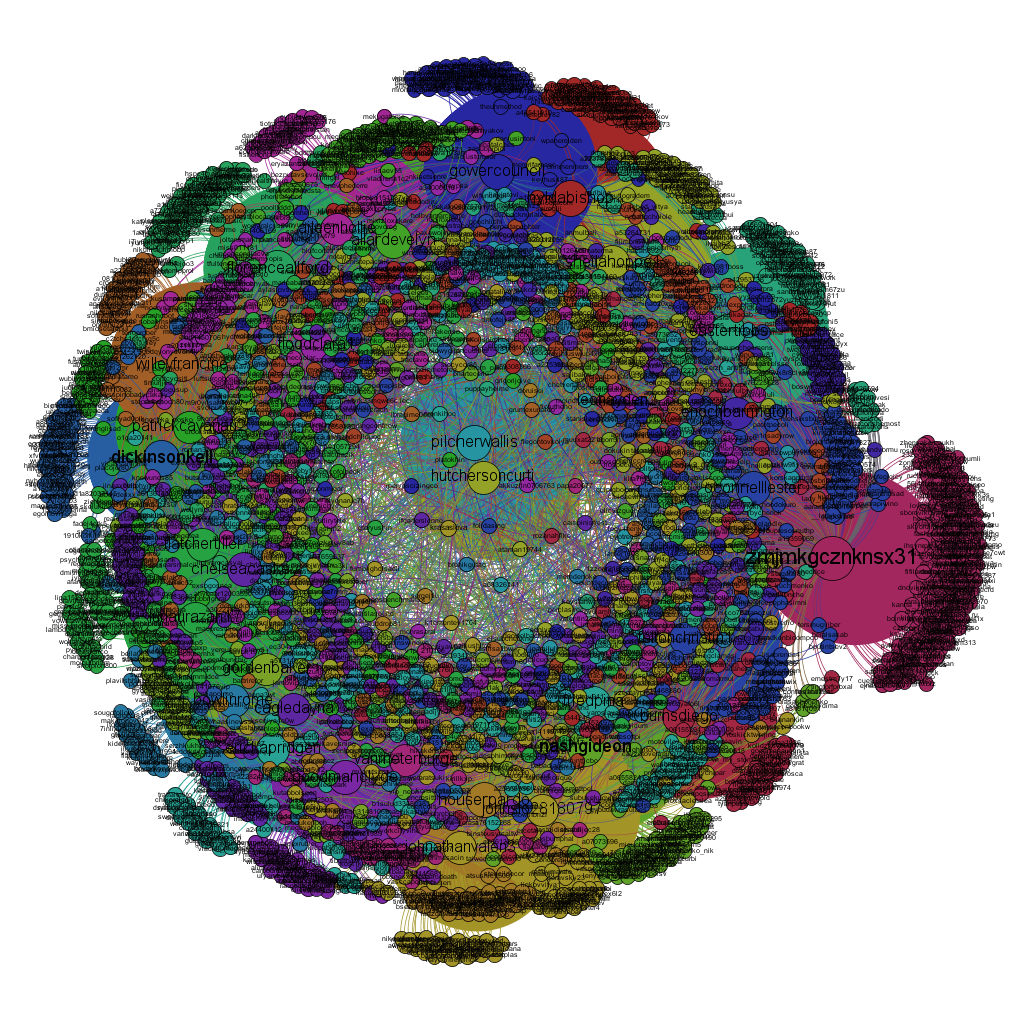

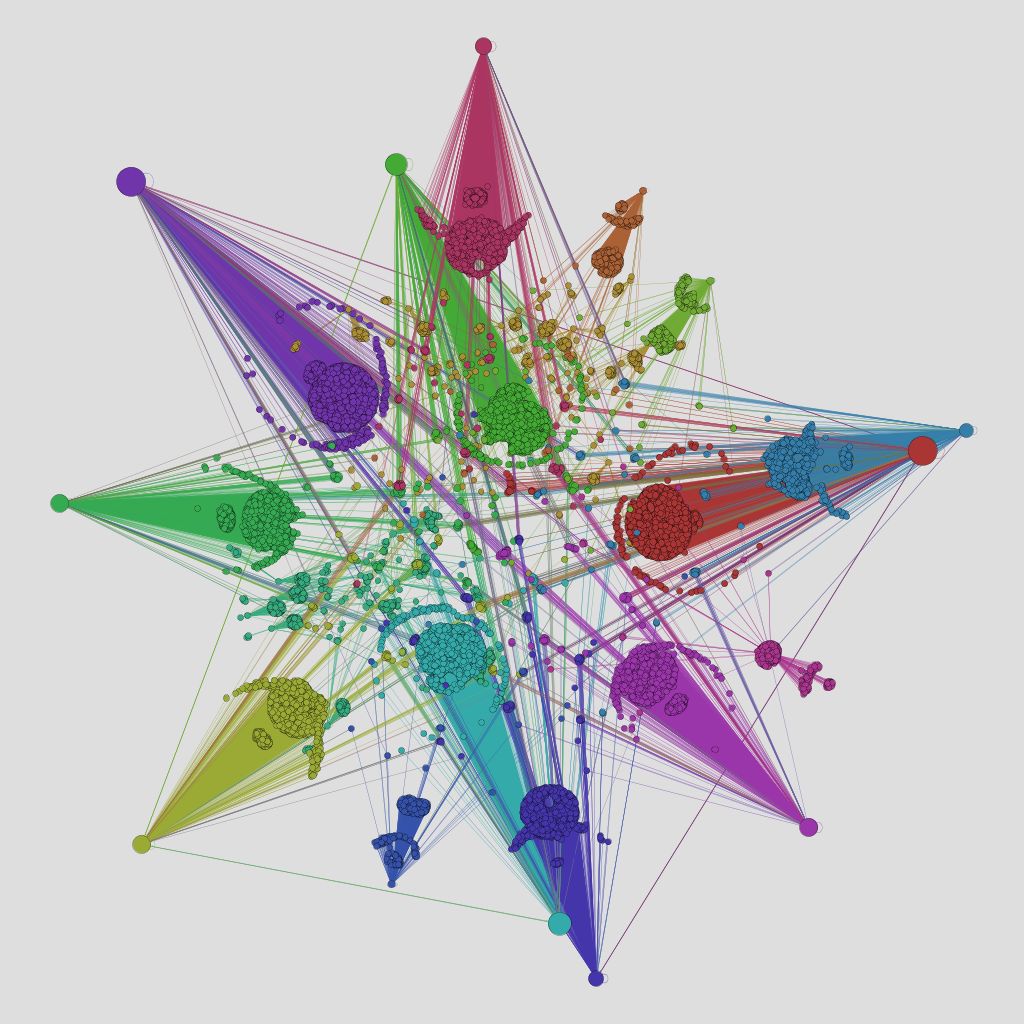

Then I used Gephi, another free data analysis tool, to visualize the data as an entity-relationship graph. The coloured circles—called Nodes—represent Twitter accounts, and the intersecting lines—known as Edges—refer to Follow/Follower connections between accounts. The accounts are grouped into colour-coded community clusters based on the Modularity algorithm, which detects tightly interconnected groups. The size of each node is based on the number of connections that account has with others in the network.
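To make the two quantities in that description concrete, here is a minimal Python sketch that counts each account's connections (the value used for node size) and computes the modularity score of a given grouping. It is not Gephi's implementation: Gephi searches for the grouping that maximises this score, while the code below only scores a grouping that is supplied to it, and it treats follow links as undirected. The account names, edges and grouping are invented for illustration.

```python
from collections import defaultdict

def degrees(edges):
    """Count connections per account, treating each follow link as undirected."""
    deg = defaultdict(int)
    for a, b in edges:
        deg[a] += 1
        deg[b] += 1
    return dict(deg)

def modularity(edges, community):
    """Newman modularity Q of a partition of an undirected, unweighted graph.

    Q = sum over communities c of  L_c / m - (D_c / (2m)) ** 2
    where m is the number of edges, L_c the number of edges inside c,
    and D_c the sum of the degrees of the nodes in c.
    """
    m = len(edges)
    deg = degrees(edges)
    internal = defaultdict(int)    # edges with both ends in the same community
    degree_sum = defaultdict(int)  # total degree per community
    for a, b in edges:
        if community[a] == community[b]:
            internal[community[a]] += 1
    for node, d in deg.items():
        degree_sum[community[node]] += d
    return sum(internal[c] / m - (degree_sum[c] / (2 * m)) ** 2 for c in degree_sum)

# Hypothetical toy network: two triangles joined by a single link (c-d).
edges = [("a", "b"), ("b", "c"), ("a", "c"),
         ("d", "e"), ("e", "f"), ("d", "f"),
         ("c", "d")]
split = {"a": 0, "b": 0, "c": 0, "d": 1, "e": 1, "f": 1}
lumped = {node: 0 for node in split}

print(degrees(edges))
print(modularity(edges, split), modularity(edges, lumped))
```

On this toy network, splitting the accounts into the two triangles scores about 0.36, while lumping everything into one group scores 0. A higher score means the grouping captures tightly interconnected clusters.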

Extended network of 2,900 Twitter bots taken from Nemtsov thread sample. Image by Lawrence Alexander.

It is clear from how dense and close to each other the nodes are in the graph that this is a large and highly connected network. Most of the bots follow many others, giving each of them high follower and following counts. On the periphery, there are a few rings of less-connected accounts, perhaps indicating that the “bot” network was still being “grown” at the time of its capture; you could think of it like a tree, with branches spreading outwards.

But there was one crucial question in this analysis: how was it possible to be sure that the network consisted chiefly of bots and not real humans?

NodeXL doesn’t just gather information on who follows whom. It also acquires metadata: the publicly available details of each Twitter account and its behavior. This shows that out of the 2,900-strong network, 87% of profiles had no timezone information and 92% had no Twitter favorites. But in a randomized sample of 11,282 average Twitter users (based on accounts that had tweeted the word “and”), only 51% had no timezone and, tellingly, only 15% had no favorites. Setting a timezone and saving favorites are both traits of what could be classified as “human” behavior.
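The comparison above comes down to counting, for each group of accounts, what share has a given profile field left empty. A minimal Python sketch of that calculation follows. The field names (`time_zone`, `favourites`) and the four sample records are hypothetical and are not NodeXL's actual column names or real account data.

```python
def share_missing(accounts, field):
    """Fraction of accounts whose `field` is empty, zero or absent."""
    if not accounts:
        raise ValueError("no accounts to summarise")
    missing = sum(1 for acc in accounts if not acc.get(field))
    return missing / len(accounts)

# Hypothetical records standing in for exported account metadata.
sample = [
    {"name": "acct1", "time_zone": "", "favourites": 0},
    {"name": "acct2", "time_zone": None, "favourites": 0},
    {"name": "acct3", "time_zone": "Moscow", "favourites": 0},
    {"name": "acct4", "time_zone": "", "favourites": 12},
]

for field in ("time_zone", "favourites"):
    print(f"{field}: {share_missing(sample, field):.0%} missing")
```

Running the same function over a suspected bot network and over a control sample gives directly comparable percentages.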

For added comparison, an entity-relationship graph of the randomized Twitter user control network is shown below. In contrast with the bots visualisation, this network has several unconnected and isolated clusters: groups of Twitter users that aren’t linked to each other—a perfectly normal occurrence in a random group of users.
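One way to put a number on "unconnected and isolated clusters" is to count connected components: groups of accounts with no follow link, in either direction, to any account outside the group. The Python sketch below does this with a breadth-first search. It is an illustration only, not part of the NodeXL or Gephi workflow, and both toy networks are invented.

```python
from collections import defaultdict, deque

def connected_components(nodes, edges):
    """Return a list of sets, one per group of mutually reachable accounts."""
    adj = defaultdict(set)
    for a, b in edges:
        adj[a].add(b)
        adj[b].add(a)  # ignore follow direction when testing for isolation
    seen = set()
    components = []
    for start in nodes:
        if start in seen:
            continue
        seen.add(start)
        queue = deque([start])
        group = set()
        while queue:
            node = queue.popleft()
            group.add(node)
            for neighbour in adj[node]:
                if neighbour not in seen:
                    seen.add(neighbour)
                    queue.append(neighbour)
        components.append(group)
    return components

# Hypothetical bot-like network: every account reachable from every other.
bot_nodes = ["a", "b", "c", "d"]
bot_edges = [("a", "b"), ("b", "c"), ("c", "d"), ("d", "a")]

# Hypothetical control-like network: two separate pairs and one loner.
control_nodes = ["u", "v", "w", "x", "y"]
control_edges = [("u", "v"), ("w", "x")]

print(len(connected_components(bot_nodes, bot_edges)))
print(len(connected_components(control_nodes, control_edges)))
```

The bot-like toy network forms a single component, while the control-like one falls into three.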

Having unearthed such a large-scale network from a single source, I decided to take the bot-hunting further. Searching Twitter for phrases such as “Kremlin bots”, “pro-Russian trolls” and “Putin sockpuppets,” I found several users sharing screenshots of alleged bot activity. Some also used the #Kremlinbots (#Кремлеботы) hashtag to report sightings.

Using the same method as with the anti-Nemtsov tweets, I gathered networks of accounts based around the use of some of the reported key phrases that revealed larger communities—or, in some cases, just a list of users shown in the screenshot. These were divided into groups labelled A, B, C and D. (I will elaborate on the reason for this grouping in part two of the analysis.)

@PressRuissa is a parody account (now suspended) spoofing a pro-Russian media outlet, with a mix of satire and commentary on disinformation and bias. One of its tweets was the starting source for the Group A network:

The Russian tweeters reveal that “Novaya Gazeta covers up their bots’ activity” in a surprising unison. (via @nokato) pic.twitter.com/DSwcIKWpDg

— Falcon News Intl. (@PressRuissa) March 13, 2015

Group B came from different sources of suspected bot accounts whose behavior seemed to match the previous samples. Some were identified by their tendency to change from Russian language to English with the single error message “RSS in offline mode”—presumably caused by a glitch in their control software.

RSS in offline mode

— Леонид Верхратский (@GCL2BUugsq4n5JL) April 1, 2015

For the remaining sources, a tweet by Devin Ackles, an analyst for think tank CASE Ukraine, provided the basis for Group C.

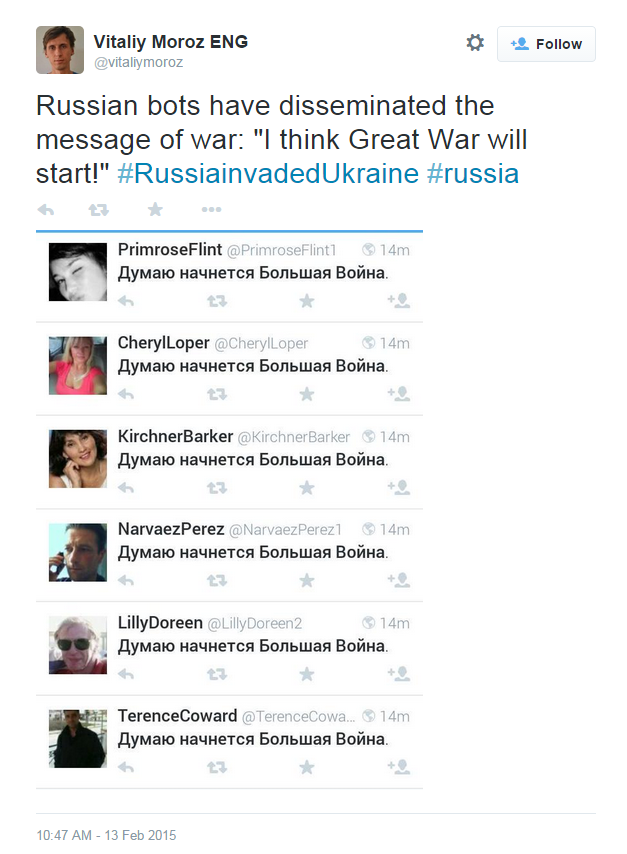

And lastly, Vitaliy Moroz of Internews Ukraine shared a screencap of bot accounts that formed the sample for Group D.

All four groups were merged into a single data set, resulting in a total of 17,590 Twitter accounts. As with those producing the anti-Nemtsov tweets, the metadata confirmed that the vast majority were indeed bots. 93% showed no location on their profile, 96% had no time zone information and 97% had no Twitter favorites saved.

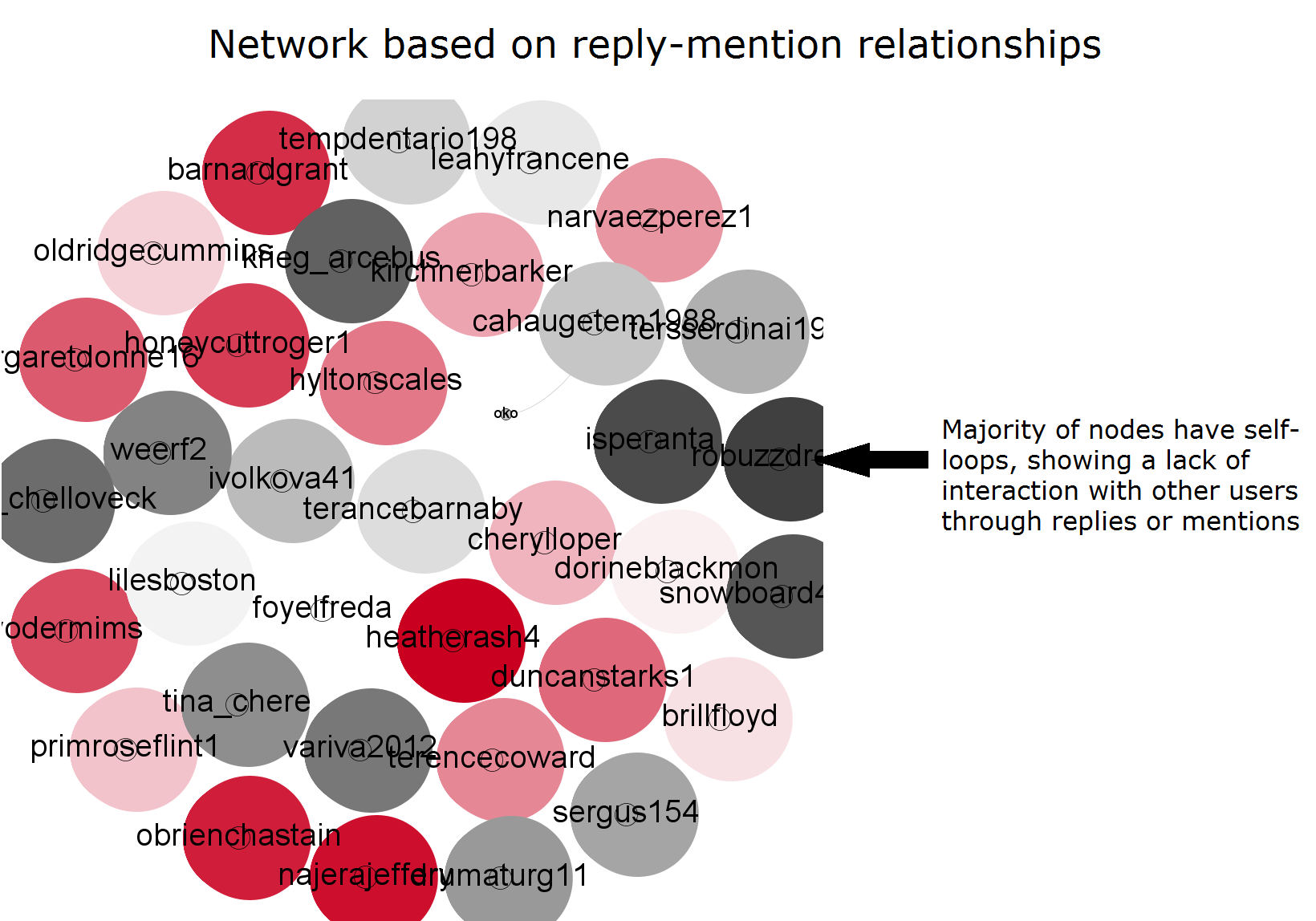

Also, despite having produced an average of 2,830 tweets, the accounts almost never interacted with other Twitter users through @replies or @mentions.
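As a rough illustration of how such an interaction rate could be measured from raw tweet text, the Python sketch below counts the share of tweets containing an @mention (an @reply is simply a tweet that begins with one). This is an assumed approach, not necessarily how the figures here were derived, and the sample tweets other than the "RSS in offline mode" message are invented.

```python
import re

# "@" followed by word characters, but not when it sits inside a word
# (which excludes e-mail addresses such as name@example.com).
MENTION = re.compile(r"(?<!\w)@\w+")

def interaction_rate(tweets):
    """Share of tweets that mention or reply to another account."""
    if not tweets:
        return 0.0
    interacting = sum(1 for text in tweets if MENTION.search(text))
    return interacting / len(tweets)

# Hypothetical tweet texts.
tweets = [
    "RSS in offline mode",
    "@someone thanks for the link",
    "Breaking news headline",
    "write to me at name@example.com",
]

print(f"{interaction_rate(tweets):.0%} of tweets interact with another user")
```

A rate close to zero across thousands of tweets per account would match the pattern described above.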

Intriguingly, many of the bots had been given western-sounding names such as barnardgrant, terancebarnaby, terencecoward and duncanstarks.

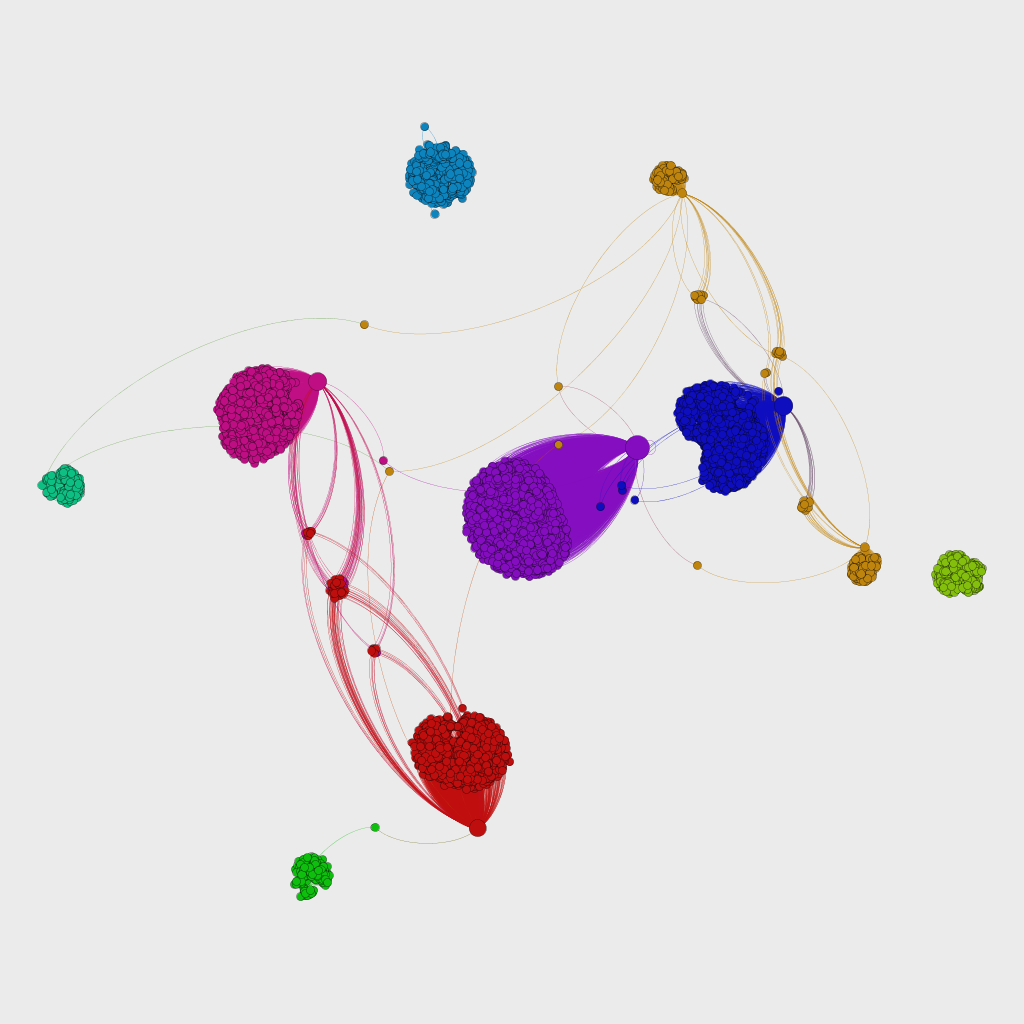

But an even more surprising result came when I visualized the bot groups’ follow relationships. Even though the samples had been taken from four separate sources, the combined network was found to be intensely interconnected.

This sharply contrasts with the randomized control sample: the final dataset showed no isolated groups or outliers at all. This strongly supports the idea that the bots were created by a common agency—and the weight of evidence points firmly towards Moscow.

In my next post I will look at the timeline behind the creation of the bots, and see how it correlates with political events in Russia and Ukraine.