Between May and July 2019, bots accounted for a staggering 55% of all Russian-language Twitter messages about the NATO presence in the Baltic States and Poland, a study by the NATO Stratcom Centre of Excellence reveals.

This represents an increase in bot activity from the second quarter of this year, when 47% of Russian-language Twitter messages about the NATO presence came from bots.

What are they tweeting about?

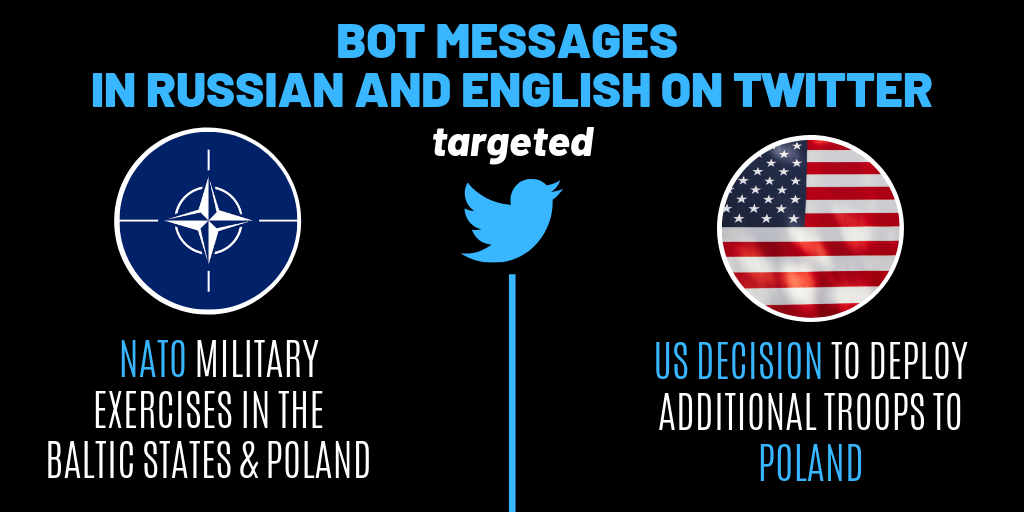

The Russian-language bots were particularly interested in NATO military exercises that took place in the Baltic States and Poland during this period.

In fact, according to the NATO Stratcom Centre of Excellence, which has studied robotic activity for the past three years, there is a distinct pattern: whenever a military exercise takes place, coverage by pro-Kremlin disinformation outlets is systematically amplified by inauthentic accounts.

The disinformation network is activated to highlight stories by Sputnik and push broader narratives alleging that NATO seeks to increase confrontation with Russia, uses false pretexts to encircle Russia, and threatens global security. At the same time, countries hosting international military exercises are portrayed as militant and destitute.

Meanwhile, English-language bots, though less active than at the beginning of the year, continued to amplify pro-Kremlin disinformation messages about the US decision to deploy additional troops to Poland. This decision, according to the pro-Kremlin media, is a provocation against Russia and a violation of earlier treaties (in fact, it is neither).

What’s new in the bot-world?

The long-term study of bot activity also revealed sharp changes in bot tactics on Twitter. As the platform aims to prevent inauthentic activity, malicious actors adapt their behaviour in order to avoid detection.

In 2017, the majority of bots were so-called “post-bots”, which share plain-text messages with news headlines or links. In just one year, the proportion of this type of bot declined, though not as considerably as one might expect, given how primitive they are. Today they still make up over 25 percent of Russian-language Twitter bots.

In the past two years, there has also been an increase in Russian “news-bots”. This type of automatic posting is actually used by many legitimate news outlets whose accounts cross-post content from their websites. But in the disinformation ecosystem, “news-bots” are used to spread content from fringe or fake-news websites. The rise of this type of bot is likely connected to the fact that accounts pretending to be news outlets are less likely to be removed from the platform.

From summer 2017 onwards, the NATO CoE also observed the emergence of “mention-trolls”: fake accounts that only tweet at other users. Their objective is to flood journalists’ and institutions’ accounts with fake feedback, rendering any kind of meaningful discussion impossible.

As the dynamics of coordinated inauthentic behaviour online are shifting, it is as important as ever to strengthen our abilities to spot it. Here’s how:

What is the best way to keep your Facebook/Instagram/Twitter clean? Here are some tips! pic.twitter.com/FBuMJXojTO

— EU Mythbusters (@EUvsDisinfo) December 16, 2018