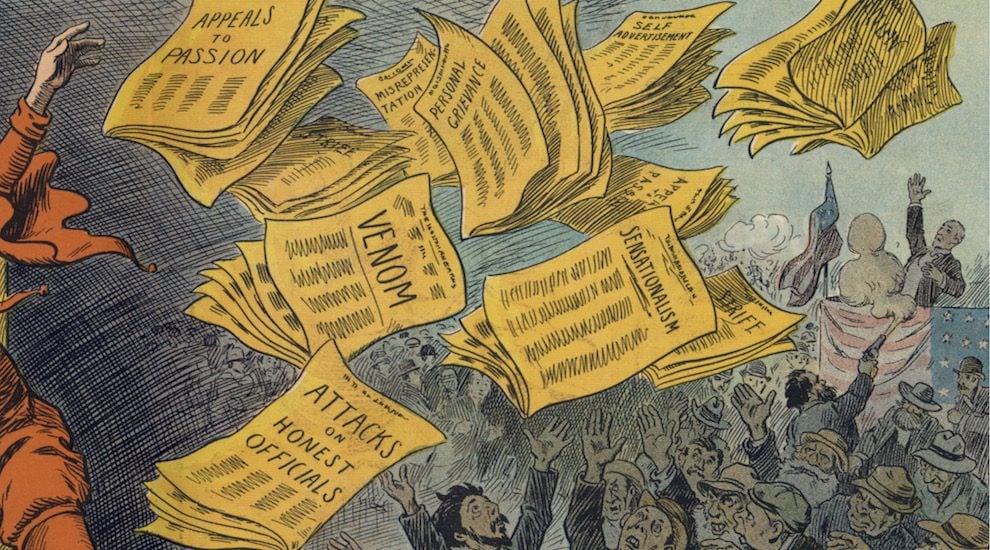

Plus: Does all our yammering about fake news make people think real news is fake?

By Shan Wang, for NiemanLab

The growing stream of reporting on and data about fake news, misinformation, partisan content, and news literacy is hard to keep up with. This weekly roundup offers the highlights of what you might have missed.

Study says heavy Facebook use is linked to more hate crimes against asylum seekers in Germany. Wait, is that what it says? The New York Times on Monday published a story, datelined from a “pro-refugee” German town, exploring the terrifying trajectory of actual German Facebook superusers who become radicalized through their intense activity in anti-refugee bubbles on social media and go on to commit real-life acts of violence. The piece, by Amanda Taub and Max Fisher of the Interpreter column, leaned on a previously covered working paper from researchers at the University of Warwick, and described the paper’s key finding as follows:

Towns where Facebook use was higher than average, like Altena, reliably experienced more attacks on refugees. That held true in virtually any sort of community — big city or small town; affluent or struggling; liberal haven or far-right stronghold — suggesting that the link applies universally.

Their reams of data converged on a breathtaking statistic: Wherever per-person Facebook use rose to one standard deviation above the national average, attacks on refugees increased by about 50 percent.

Shortly thereafter, academics began tussling over both the study’s quality and how it was portrayed in the Times piece.

Without access to comprehensive user behavior data from Facebook, the researchers chose what they considered the next-best approximations. They looked at, for instance, 39,632 posters on the public Facebook page of the newish right-wing party Alternative für Deutschland as a proxy for how much towns were exposed to nationwide anti-refugee content on social media. They also looked at 21,915 users of the Nutella Facebook page (which the paper says is one of the most followed pages in Germany, though it cites a global follower count rather than a German one) whose locations they could pinpoint, as a proxy for how active the general, not specifically right-wing, populations of these towns are on Facebook. Are these good proxies? Critics weren’t so sure. But I’m not sure they’re totally terrible proxies, either.

This is 100% true, and perhaps the more important take away here. Researcha shouldn’t have to scrape Nutella pages for social data of upmost importance https://t.co/n8e066e2RJ

— hal (@halhod) August 22, 2018

The paper also finds that when there were internet or Facebook outages in heavy-Facebook-use towns during the period studied, there were fewer attacks against refugees. Critics were wary of this, too. Causation? Correlation? And are we sure we know what is causing what, as the Times story so forcefully argues?

It’s a narrative that feels right — there’s a lot of hateful shit posted on Facebook, and that avalanche of content eventually whips up very engaged users into a hateful frenzy that pushes them over the edge in real life. The Times story has real anecdotes of people going through this transformation, and others witnessing these transformations in their communities. But it leans on this working paper to neaten the narrative, and reality is anything but neat.

We should probably be careful about just how much coverage we’re centering on fake news. There’s evidence that when people perceived as “elites” (such as politicians, journalists, or certain activists) bring up fake news, that talk can itself leave people unsure about the veracity of real news stories, according to a new study by Emily Van Duyn and Jessica Collier of the University of Texas at Austin.

In the study, participants recruited via Mechanical Turk were first asked to classify the subject matter of tweets that were about either fake news or the federal budget. The tweets weren’t taken from actual Twitter; the researchers generated them. They hinted at real organizations like NPR or the Sierra Club and came from verified accounts with white, male names and avatars. Participants were then asked to read several news articles, some of which were fake, and to say whether they thought each article was real or fake, or that they weren’t sure. The results indicated that seeing tweets about fake news had messed with people’s ability to identify real news articles as real, regardless of whether the participant identified as conservative or liberal.

Individuals primed with elite discourse about fake news identified real news with less accuracy than those who were not primed. As expected, political knowledge related to accurate identification of real news, where the more knowledgeable were more accurate than the less knowledgeable. Neither of these findings were true for the identification of fake news. Individuals primed with discourse about fake news were not more accurate in their identification of fake news than those who were not primed, and political knowledge also appeared to have no effect.

“Overall, these results point to a troubling effect,” the researchers conclude. “A similar ability to identify fake news between those in the prime and control conditions means that efforts to call attention to the differences between fake and real news may be instead making the distinction less clear.”

Here’s a theory that WhatsApp could moderate content if it wanted to. WhatsApp has always said end-to-end encryption makes flagging hoaxes on its end impossible. But if it does retain message metadata for some period of time, could it do some content moderation on its end? That would get to the root of the problem faster than many of the noble, WhatsApp-focused fact-checking initiatives popping up around the world.

That’s the approach Himanshu Gupta and Harsh Taneja put forward in a piece for CJR. They write:

[E]ven if WhatsApp can’t actually read the contents of a message, it [may be able to] access the unique cryptographic hash of that message (which it uses to enable instant forwarding), the time the message was sent, and other metadata. It can also potentially determine who sent a particular file to whom. In short, it can track a message’s journey on its platform (and thereby, fake news) and identify the originator of that message.

If WhatsApp can identify a particular message’s metadata precisely, it can tag that message as “fake news” after appropriate content moderation. It can be argued that WhatsApp can also, with some tweaks to its algorithm, identify the original sender of a fake news image, video, or text and potentially also stop that content from further spreading on its network.

So are they suggesting a chat app get into the business of deleting content? Yep. “While WhatsApp may insist that it does not want to get into content moderation, or that building traceability of fake news messages would undermine end-to-end encryption, we suggest the authorities should ask them to do so going forward, considering the increasing severity of the fake news problem,” they write.

To be clear, WhatsApp didn’t actually confirm any of this metadata stuff to the authors. 👋🏽 WhatsApp, where are you?
