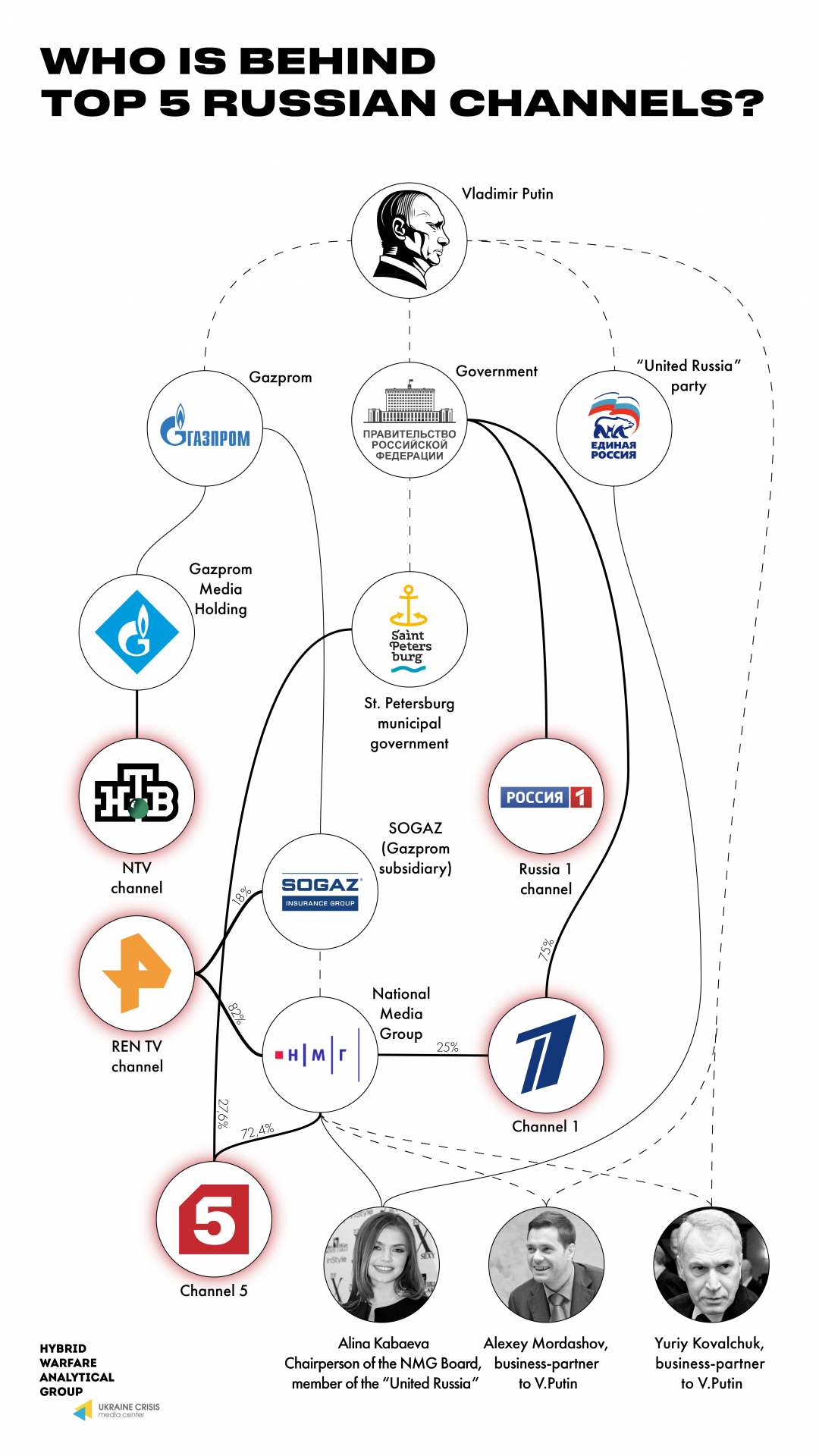

WHO IS BEHIND THE TOP 5 RUSSIAN CHANNELS?

The Hybrid Warfare Analytical Group has produced an infographic identifying the entities behind Russia's top five TV channels: Russia 1, Channel 1, Channel 5, NTV and REN TV.

WHAT DO THEY HAVE IN COMMON?

Each of them has direct or indirect ties to the Russian government, the ruling United Russia party, Gazprom, or people close to President Vladimir Putin, and ultimately to Putin himself.

Topics of the Week

A dive into the surveillance capabilities of the Kremlin.

What are the early strategies employed to combat disinformation ahead of the 2020 election in the US?

Recommendations for Europe on how to safeguard its interests in the future relationship with China.

Good Old Soviet Joke

It’s August 21st, 1968 in Czechoslovakia. A woman is trying to wake up her husband:

“Wake up! Wake up! We are being occupied!”

“Oh please, the Russians wouldn’t let that happen…”

Policy & Research News

The Kremlin’s surveillance capabilities

A Kremlin-linked research institution has developed sophisticated surveillance systems, Meduza reports. The institution, the Presidential Affairs Department's Scientific Research Computing Center, is headed by former intelligence officers, and its main customers are Russia's intelligence services and military.

One of the tools it developed, MediaMonitor, can track the spread of news on social media, map clusters of reposts of specific articles across Russia, and determine users' IP addresses. A different service, Sherlock, can then be used to retrieve a user's personal data (passport and tax numbers, property ownership, etc.). Yet another system, PSKOV, is capable of building “whole dossiers about entire groups on social networks,” establishing relationships between people and searching Tor traffic. However, PSKOV left the government unimpressed, and the project was suspended due to financing problems. The last tool, Poseidon, is used by Russian law enforcement to track “extremism,” that is, to monitor protest activity on social media.

Apart from enhancing law enforcement's surveillance capabilities, the organization also helps produce intelligence reports for the military. According to Meduza, the Center calculated the impact that an accident at one of Ukraine's power plants would have on the country's power grid, and modelled a Chinese offensive operation against Russia. The Center is also “in a race against Bellingcat to geolocate” Russian troops in Syria and Donbas whenever visual evidence of their presence becomes public.

Media coverage for manipulated content

Data & Society published a report on source hacking, a media manipulation tactic aimed at obscuring the origin of information. The researchers describe four source hacking techniques and provide examples of their application.

Viral sloganeering means spreading short slogans that present a simplified view of complex and controversial topics through memes, hashtags, videos, flyers, etc. A good example is the “Jobs not Mobs” slogan, which went viral after being artificially amplified by anonymous right-wing social media users. Once the slogan reached President Trump, news outlets were compelled to report on it, giving it even more traction.

Leak forgery is the release of fake incriminating documents to influence a political process. The Macron Leak, a Kremlin-orchestrated distribution of forged documents mixed with authentic ones that aimed to influence the media and sway the 2017 French presidential election, failed only because of timely action taken by the authorities.

An evidence collage is an easily shared image made up of screenshots and text arranged to look like incriminating evidence. Such collages combine accurate and doctored pieces of information and can gain significant traction during breaking news events, as happened in 2017 when an innocent person was blamed for the Charlottesville car attack.

Keyword squatting is the creation of social media profiles and content associated with political and social movements in order to “occupy” certain keywords and topics and shape future narratives. In 2017, Facebook removed hundreds of accounts and pages associated with Black activism, conservatism, LGBT issues, etc. that were controlled by a Russian “troll farm.”

The common denominator of these techniques is disguising authorship to avoid scrutiny and seeking media coverage for amplification. The new terms suggested by the authors can make identification of future influence operations easier and their description more accurate.

US Developments

Early Measures in the 2020 Fight Against Disinformation

A recent article by John Thomas on Medium introduces some of the early strategies employed to combat disinformation ahead of the 2020 election. Thomas opens by explaining the extensive reach that Russia's malign Internet Research Agency (IRA) enjoyed during the run-up to the 2016 election, which he attributes entirely to social media influence. He then warns readers that 2020 could see additional players in the disinformation circuit, including Iran, North Korea or even China.

The piece also describes how a host of tech companies, fully aware of what lies ahead in a campaign year, gathered at Facebook's headquarters to discuss effective methods of combating various forms of misinformation. Of the countless malevolent tactics employed by agencies like the IRA, Thomas cites an interview with Paul Barrett of the NYU Stern Center for Business and Human Rights, who singles out deepfakes as the greatest threat. The author outlines different timing scenarios in which a well-made deepfake video could drastically affect a vote's outcome. Besides deepfakes, Thomas points to the success of memes on Twitter and Instagram's growing usefulness to bad actors as primary sources of misinformation threats ahead of the 2020 election. Finally, when reviewing methods to control fake news online, Thomas warns that social media companies need to be careful to preserve freedom of speech while fighting malicious disinformation. After all, the last thing U.S. institutions want to do while protecting democracy is to infringe upon its foundational freedoms.

Kremlin Watch Reading Suggestion

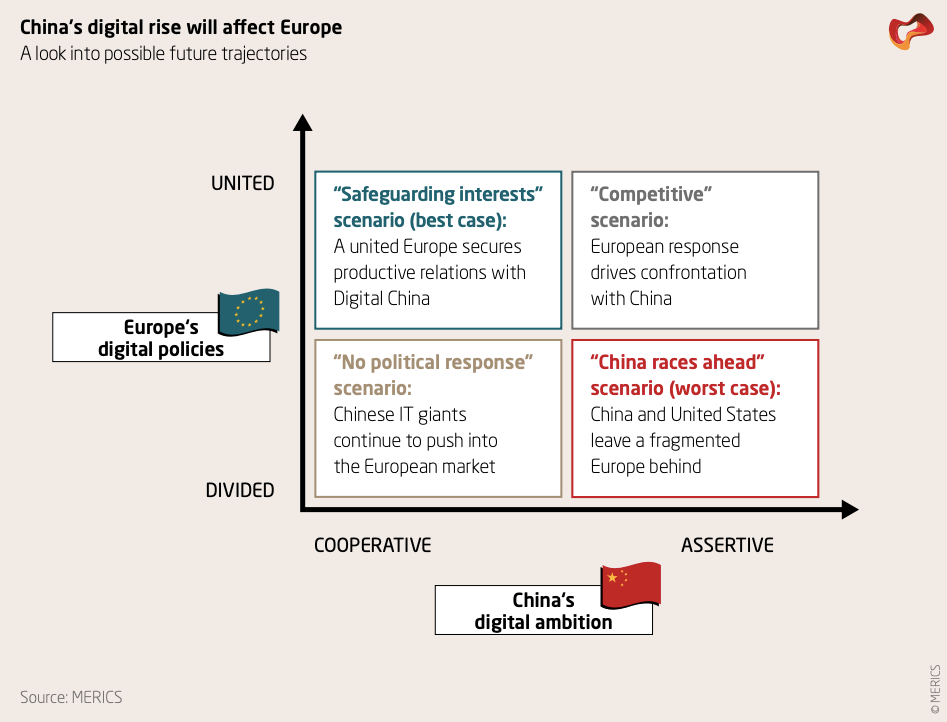

China’s Digital Rise: Challenges for Europe

Partly due to government-led strategies and initiatives such as the Digital Silk Road and Made in China 2025, Chinese technology companies have emerged as global leaders in new digital technologies. They now hold a leading position in setting standards for blockchain technology, 5G and the Internet of Things. The Berlin-based Mercator Institute for China Studies (MERICS) has issued a report surveying China's current position in emerging technologies and the myriad challenges it poses for Europe. The report includes several possible scenarios for the future relationship between Europe and China, along with sixteen recommendations for safeguarding European interests.

MERICS found that China's digital rise is affecting Europe in three main ways. First, it creates unfair economic competition due to the Chinese government's involvement in, and subsidies for, its technology companies. Second, integrating Chinese technology into critical digital infrastructure increases security risks, particularly the risk of espionage by the Chinese government. Third, Chinese views on personal privacy and human rights are in direct conflict with EU norms (especially as Europeans living in China are, under current plans, to be integrated into the Social Credit System).

European and Chinese relations in the digital sphere will evolve over time, but several factors will shape them: the political-economic dynamics in China, the degree of European alignment on digital policies, the development of Europe's own digital industry, and China's and Europe's respective relationships with the United States. Each of the report's four scenarios deals with these factors. For instance, the ‘best case scenario’ has Beijing expanding market reforms and the EU forming a united framework for the digital single market, while the worst case finds Europe dependent on Chinese technologies due to a fractured digital policy landscape and a lack of home-grown digital infrastructure.

Kremlin Watch is a strategic program of the European Values Think-Tank, which aims to expose and confront instruments of Russian influence and disinformation operations directed against the liberal-democratic system.