By Nuurrianti Jalli, for The Conversation

TikTok is one of the top five social media platforms in the world this year.

In Southeast Asia last year, 198 million people, about 29% of the region’s population, used TikTok. It is not an exaggeration to say the platform has become one of the souks, if not the souk, of ideas and opinions for people in the region.

Like other scholars, my research team was intrigued by TikTok. Specifically, we wanted to look at how information, including political misinformation and disinformation, flows on the platform. The difference between the two forms of false information is intent: misinformation is spread without the aim of deceiving, while disinformation is intentionally, maliciously misleading.

During our eight months of research, we found tracking political misinformation and disinformation on TikTok quite challenging. This was despite the fact that the platform launched a fact-checking program in 2020 in partnership with independent fact-checking organisations that would “help review and assess the accuracy of content” on the platform.

Under this program, TikTok surfaces potential misinformation to its partners. It may include videos flagged by TikTok users for misinformation, or those related to COVID-19 or other topics “about which the spread of misleading information is common”.

However, we still found it difficult to track misinformation and disinformation on the platform. The main obstacles included fact-checking audiovisual content and identifying foreign languages and terms.

Fact-checking audiovisual content

It is difficult to fact-check audiovisual content on TikTok.

To effectively track mis/disinformation, all content should be watched carefully and understood based on local context. To ensure a correct assessment, this requires long hours of human observation and video analysis (observing language, nonverbal cues, terms, images, text and captions).

This is why fact-checkers globally rely on public participation to report misleading content, while the human fact-checkers themselves focus mainly on verifying viral content.

AI technology can help verify some of these posts. However, fact-checking audiovisual content still relies heavily on human assessment for accuracy.

To date, audiovisual content is arguably one of the most challenging formats to fact-check across the world. Other social media platforms face the same challenge.

In our research, we found much of the content monitored contained no verifiable claims. This meant it could not be objectively corroborated, or debunked and tagged as misinformation.

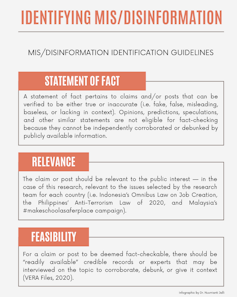

To determine which videos or comments contained inaccurate claims, we developed a misinformation framework based on the criteria for determining verifiable statements used by VERA Files in the Philippines and Tirto.id in Indonesia. Both organisations are signatories of Poynter’s International Fact-Checking Network.

We also considered the 10-point list of red flags and tips for identifying misinformation provided by Colleen Sinclair, an associate professor of clinical psychology at Mississippi State University.

Using this misinformation framework, we found the majority of the videos and corresponding comments monitored either carried merely subjective statements (opinion, calls to action, speculation) or made claims that could not feasibly be verified.

Examples included comments on Indonesia’s controversial new Labour Law, known as the Omnibus Law; debates on the inappropriateness of rape jokes in schools, which initiated the #MakeSchoolASaferPlace movement in Malaysia; arguments surrounding poor government policies in Malaysia amid COVID-19, which started another online campaign, #kerajaangagal; and the Philippines’ Anti-Terrorism Law. These comments were deemed not verifiable, since they were emotionally driven and based on users’ opinions of the issues. Therefore, they could not be tagged as containing or possibly containing mis/disinformation.

These findings could be different if content creators and video commentators integrated statements of fact or “feasible claims” that we could cross-check with credible and authoritative sources.

Identifying diverse languages, slang and jargon on TikTok

Some fact-checkers and researchers have previously noted that diverse languages and dialects in the region have made fact-checking difficult for local agencies.

In this study, we likewise found that slang made it harder to track political mis/disinformation on TikTok, even when we analysed content uploaded in our mother tongue.

Factors like generational gaps and lack of awareness of trendy slang and jargon used by content creators and users should not be underestimated in fact-checking content on the platform. Undoubtedly, this will also be an issue for AI-driven fact-checking mechanisms.

Difficult for everyone

During our research, we realised that tracking misinformation on the platform can be challenging for research teams and ordinary users alike.

Unless you are a data scientist who can write Python code to collect data through an API, gathering data from TikTok requires manual labour.

For this project, our team opted for the latter, considering most of our members were not equipped with data science skills. We tracked misinformation on the platform by manually mapping out relevant hashtags through TikTok’s search function.

A downside we observed in using this strategy is that it can be time-consuming due to the search function’s limitations.

For one, TikTok’s Discover tab allows users to sort results only by relevance or by total number of likes. They can’t sort results by the total number of views, shares or comments.

It also allows one to filter results by date of upload, but only for the last six months. This makes searching for older data, as in our case, difficult.

As such, we had to manually sift through the entries to find relevant videos with the most views or highest number of engagements uploaded within our chosen monitoring period.

This made the process quite overwhelming, especially for the hashtags that yielded thousands (or more) of TikTok videos.

TikTok should improve its platform to allow users to filter and sort through videos in search results. Specifically, they should be able to sort by number of views and/or engagements and to filter by a customised range of upload dates. Interested individuals and fact-checkers would then be able to track political mis/disinformation more efficiently.
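For readers who keep their manually collected results in a spreadsheet or list, the kind of sorting and filtering described above can be approximated offline. The following Python sketch is illustrative only and is not the method our team used: the `VideoRecord` fields, the `top_videos` helper and the definition of engagements as likes plus comments plus shares are all assumptions made for this example, and it does not connect to TikTok in any way.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class VideoRecord:
    """One manually logged search result (all field names are hypothetical)."""
    url: str
    uploaded: date
    views: int
    likes: int
    comments: int
    shares: int

    @property
    def engagements(self) -> int:
        # Assumption for this sketch: engagements = likes + comments + shares.
        return self.likes + self.comments + self.shares


def top_videos(records, start, end, key="views", limit=10):
    """Return records uploaded between start and end (inclusive),
    ranked from highest to lowest by the chosen attribute."""
    in_window = [r for r in records if start <= r.uploaded <= end]
    ranked = sorted(in_window, key=lambda r: getattr(r, key), reverse=True)
    return ranked[:limit]
```

Calling `top_videos(records, date(2021, 1, 1), date(2021, 3, 31), key="engagements")` on a hand-built list would return only the videos uploaded in that window, most engaged first. The records still have to be gathered by hand, so this eases the sifting step but not the collection itself.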

This would help TikTok become less polluted with false information as more people would have the means to monitor mis/disinformation efficiently. That could complement existing efforts by TikTok’s own fact-checking team.

Nuurrianti Jalli is Assistant Professor of Communication Studies in the Department of Languages, Literature, and Communication Studies, College of Arts and Sciences, Northern State University.

Disclosure statement: This project is funded by TechCamp, a public policy program initiated by the U.S. Department of State. Meeko Angela Camba of VERA Files, a team member for this project, also contributed her ideas to the development of this article.