Facebook’s report, published last Wednesday, shows how foreign and domestic covert influence operators have refined their tactics since 2017 in reaction to efforts by social media companies to track down and close fake accounts and counter influence operations.

Facebook reports on more than 150 coordinated inauthentic operations it has identified, reported, and disrupted. “Coordinated Inauthentic Behaviour” (CIB) is defined by Facebook as “any coordinated network of accounts, Pages and Groups on our platforms that centrally relies on fake accounts to mislead Facebook and people using our services about who is behind the operation and what they are doing.”

Source: FB, Threat Report, 2017-2020

Eye of the Hurricane

Why is this report important? Facebook, as one of the very large online platforms (those with more than 45 million monthly active users in the EU), is in a unique position to monitor disinformation and is also uniquely placed to fight it, and the report outlines future plans in this direction. So we paid close attention.

Is 150 a lot?

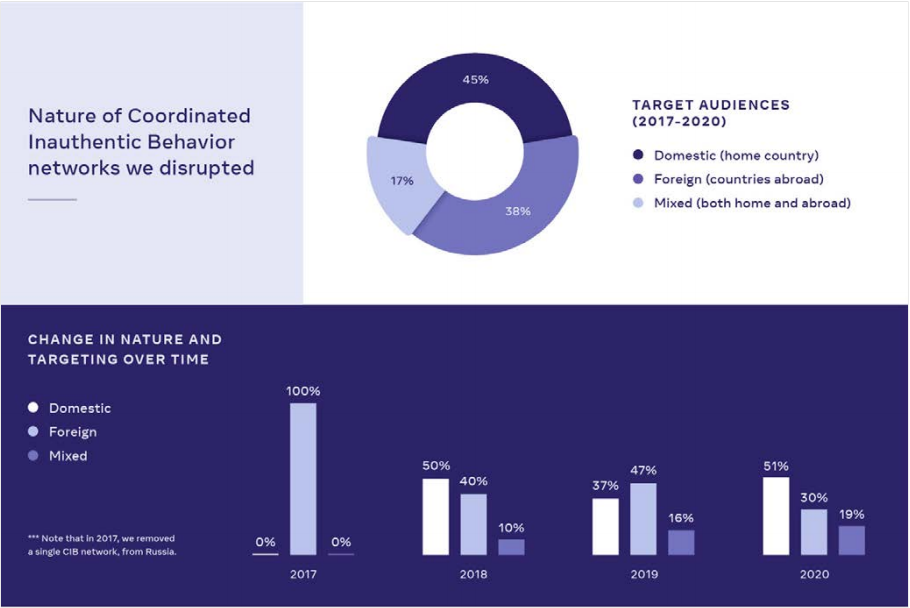

About half of all take-downs Facebook reports on were domestic in nature, a slightly smaller portion focused solely on foreign countries, and the rest targeted audiences both at home and abroad. Despite the fact that public discourse (in the US) shifted from focusing on foreign operations in 2017-2019 to focusing on domestic operations in 2020, Facebook continues to see significant portions of all three types.

Source: FB, Threat Report, 2017-2020

Over 150 networks taken down – is that a lot? This is difficult to say. The networks that were taken down differ vastly from one another. They have targeted public debate across both established and emerging social media platforms, as well as everything from local blogs to major newspapers and magazines. They were foreign and domestic, run by governments, commercial entities, politicians, and conspiracy and fringe political groups.

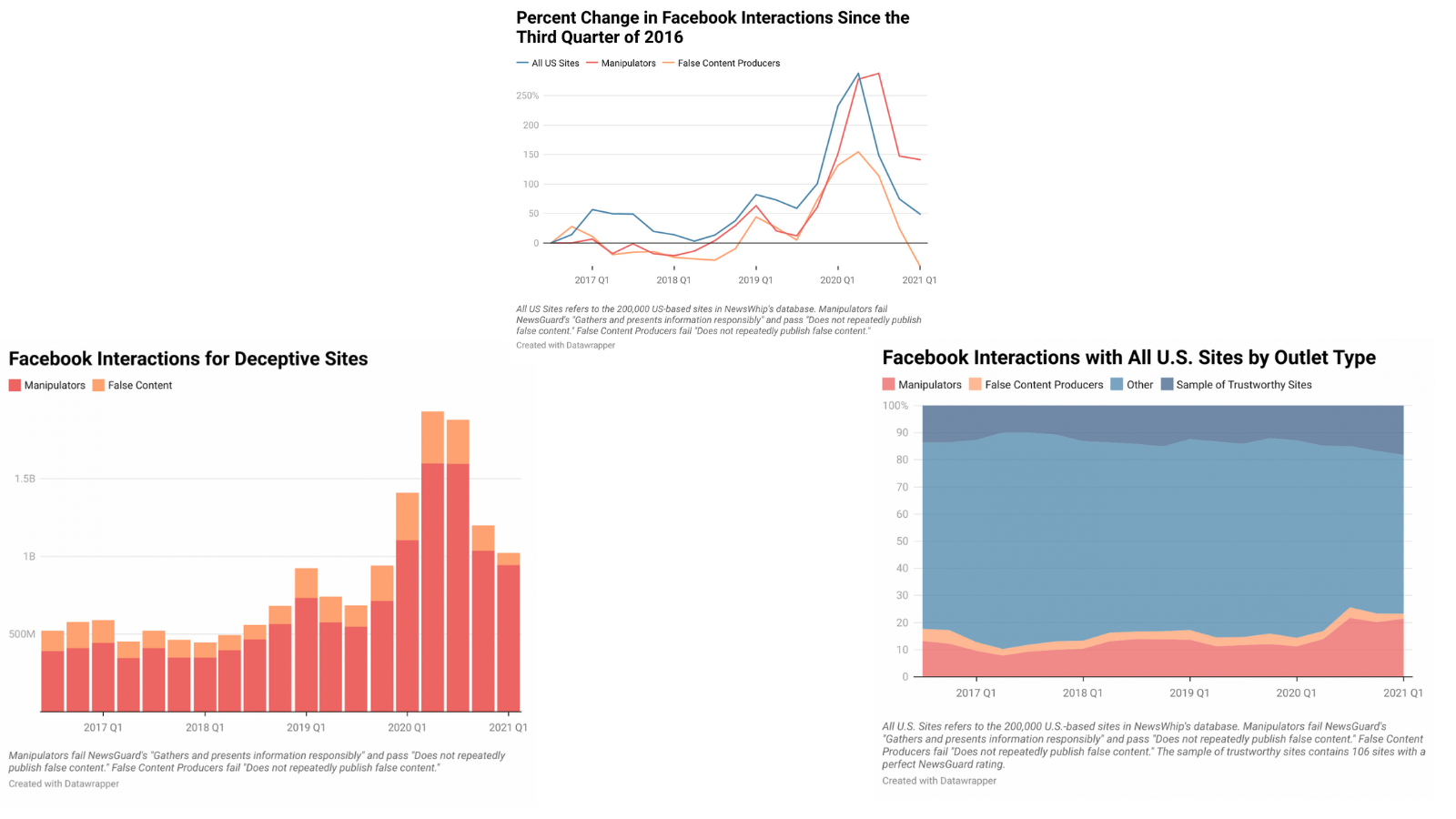

Another indicator of Facebook’s effectiveness is the latest report from the German Marshall Fund (GMF), which also came out this week. According to an analysis released by the GMF’s New Deal initiative, engagement with deceptive websites on Twitter and Facebook dropped in the first three months of 2021 compared to historic highs the year before. Still, Facebook’s report does not cover the company’s activity in the latest quarter, so we do not know how the numbers in the two reports relate to each other.

Source: GMF

According to Karen Kornbluh, director of the fund’s digital innovation and democracy initiative, it is clear, however, that the actions the platforms took contributed to the decline:

“This encouraging decrease shows that they do in fact have effective tools to tackle disinformation at the source. We encourage platforms to continue taking proactive measures instead of resorting to ineffective, whack-a-mole efforts against content that has already gone viral,” Kornbluh said in a statement.

Yet there might be reason for caution. In April, we wrote that the takedowns of fake accounts linked to the disinformation-spreading websites SouthFront and NewsFront had only a limited effect. Traffic initially fell but later recovered. How did they do this? The websites managed to attract visitors anyway, whether by masking links or by elbowing their way onto Wikipedia. What stood out in our research was that in 2020 the number of SouthFront links added to Wikipedia increased by 397%, and most links were added after the takedown (especially in the second half of the year).

In Facebook’s own words:

“one thing remains clear – adversarial threat actors are clever and will stay nimble.”

In our second article on Facebook’s report, we will discuss the trends the company perceives and mitigating strategies.