By Ben Nimmo, Atlantic Council’s Digital Forensic Research Lab

On February 20, Russia’s ambassador to the United Nations, Vitaly Churkin, died unexpectedly in New York.

One minute after the news broke on the website of Kremlin broadcaster RT, and a minute before RT managed to tweet the news, a slew of Twitter accounts posted the newsflash with an identical “breaking news” caption.

Most of the accounts had a number of features in common: they were all highly active. They were all vocal supporters of US President Donald Trump. They had avatar pictures of attractive women in revealing outfits.

And they were all fake, set up to steer Twitter users to a money-making ad site.

The network they represent is neither large nor politically influential. It is nonetheless worth analyzing as an example of how commercial concerns can use, and abuse, political groups to drive their traffic.

General behavior

In all, thirty-three accounts tweeted the RT newsflash in the minute between the report being posted online and RT tweeting it. This indicates that they were automated accounts, crawling the RT site with an algorithm to generate their own tweets — since they cannot have picked up the story from RT’s Twitter feed.
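The timing test described above can be sketched in a few lines of Python. This is an illustrative sketch only: the handles, the timestamps and the function name `accounts_in_window` are hypothetical and are not taken from the original analysis.

```python
from datetime import datetime, timedelta


def accounts_in_window(tweets, published_at, official_tweet_at):
    """Return sorted handles that tweeted after the story went online
    but before the outlet's own account tweeted it."""
    return sorted({
        handle
        for handle, posted_at in tweets
        if published_at <= posted_at < official_tweet_at
    })


# Hypothetical timestamps, chosen only to illustrate the window.
published_at = datetime(2017, 2, 20, 16, 0, 0)
official_tweet_at = published_at + timedelta(minutes=2)

# Hypothetical (handle, time of tweet) pairs.
tweets = [
    ("example_bot_a", published_at + timedelta(seconds=60)),
    ("example_bot_b", published_at + timedelta(seconds=75)),
    ("example_human", published_at + timedelta(minutes=10)),
]

fast_accounts = accounts_in_window(tweets, published_at, official_tweet_at)
print(fast_accounts)
```

An account that lands inside the window cannot have copied the outlet's tweet, which is why the window is a useful first filter rather than proof on its own.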

Of those, seven were either known pro-Russian amplifier accounts or automated news aggregators whose sole content is posts from various news organizations. Three presented themselves as Trump fan accounts, one as a pro-Trump news outlet. The other twenty-two all purported to be pro-Trump individuals expressing their admiration for the forty-fifth president of the United States.

However, their behavior patterns are consistent with automated “bot” accounts, not genuine users. The account @nataliecoxtrump, for example, was created in January, and had posted 41,200 tweets by February 21, at an average rate of some 800 a day. The account @girl_4_Trump, which began tweeting on January 24 (according to a time search of its posts), had posted 35,600 tweets by February 21, at a rate of 1,227 a day.

Almost all of the accounts, in fact, were created in January or February, and had posted over 15,000 tweets by February 21. This hypertweeting is, on its own, a clear indication of bot activity.
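The daily rates quoted above are simple divisions, and a short Python sketch makes the arithmetic explicit. It rests on two assumptions that the text does not state: the year is taken to be 2017, and both the first and the last day are counted, which is the convention that reproduces the 1,227 figure for @girl_4_Trump. The function name `tweets_per_day` is illustrative.

```python
from datetime import date


def tweets_per_day(total_tweets, first_day, last_day, inclusive=True):
    """Average tweets per day between two dates.

    With inclusive=True both end days are counted (an assumption).
    """
    days = (last_day - first_day).days + (1 if inclusive else 0)
    if days <= 0:
        raise ValueError("date range must cover at least one day")
    return total_tweets / days


# @girl_4_Trump: 35,600 tweets between January 24 and February 21.
# The year 2017 is assumed; it is not given in the text.
rate = tweets_per_day(35_600, date(2017, 1, 24), date(2017, 2, 21))
print(int(rate))  # 1227
```

Counting only the elapsed days instead (`inclusive=False`) gives a slightly higher figure, so the choice of convention should be stated whenever such rates are compared.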

A number of the accounts also appear to have taken their avatar images from other people’s accounts. The most obvious is the account called @deplorabledeep: a reverse image search of its avatar image on Google leads to an identical photo belonging to author Bob Joswick, whose photo appears both on a Twitter account and a publisher’s site.

The account called @_kaitlinOls_ used a photo of actress Kaitlin Olson taken from an online encyclopedia entry about her. The genuine Olson has her own account, which has been verified by Twitter, at @KaitlinOlson.

Other accounts took images from a variety of sources, including Instagram. The most popular appears to have been the avatar of @EmmaMAGA_ (45,500 tweets since it first posted on February 5, or over 2,800 tweets a day).

Reverse searching this image leads to Instagram user Angie Varona, and dozens of different accounts which also use the picture.

Combined with the inhumanly high level of activity, this confirms the presence of a botnet.

Specific behavior

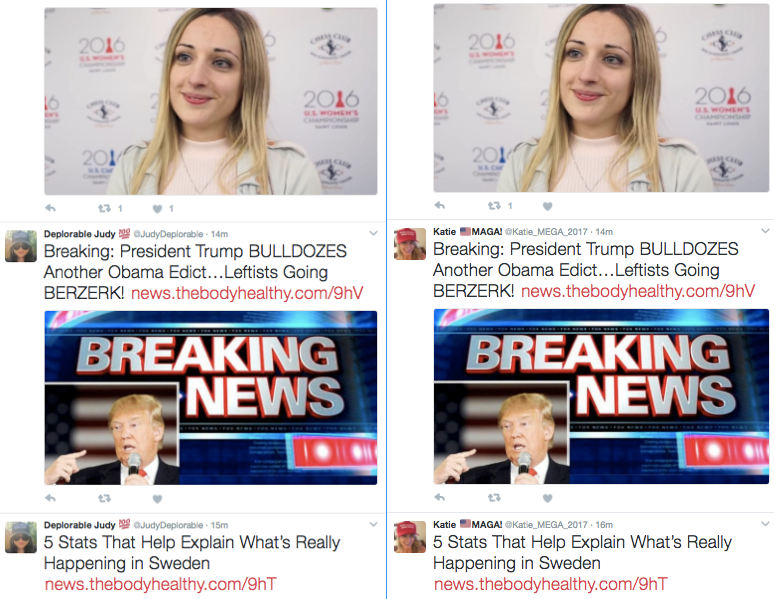

The accounts’ specific behavior confirms this. As of February 21, all their recent tweets were posts of news content from a range of sources including Breitbart, the BBC, RT, Reuters and (bizarrely) local newspaper the Coventry Telegraph in the UK. The great majority of them tweeted the same stories, from the same sources, in the same order.
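One way to test whether accounts post "the same stories in the same order" is to compare their link sequences pairwise. The sketch below uses Python's standard `difflib.SequenceMatcher` for this. The timelines, the story labels, the 0.9 threshold and the function names are hypothetical, and this is not necessarily how the comparison was made in the original analysis.

```python
from difflib import SequenceMatcher
from itertools import combinations


def order_similarity(links_a, links_b):
    """Similarity (0.0 to 1.0) of two ordered lists of links."""
    return SequenceMatcher(None, links_a, links_b, autojunk=False).ratio()


def near_identical_pairs(timelines, threshold=0.9):
    """Return (handle, handle, score) for pairs at or above the threshold."""
    pairs = []
    for (a, links_a), (b, links_b) in combinations(sorted(timelines.items()), 2):
        score = order_similarity(links_a, links_b)
        if score >= threshold:
            pairs.append((a, b, score))
    return pairs


# Hypothetical timelines: each list is the order in which stories were posted.
timelines = {
    "example_bot_a": ["story-1", "story-2", "story-3", "story-4"],
    "example_bot_b": ["story-1", "story-2", "story-3", "story-4"],
    "example_human": ["story-9", "story-2", "story-7", "story-8"],
}

pairs = near_identical_pairs(timelines)
print(pairs)
```

Genuine users who follow the same outlets will overlap on some stories, but rarely on the whole sequence, which is why the order matters as much as the content.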

In every tweet posted by these accounts, the hyperlink presented did not lead directly to a news story, but to an advertising site offering $5 in revenue per 10,000 clicks. Four different shorteners were used: news.thehealthybody.com; news.twittcloud.com; maga.news.twittcloud.com; and newspaper24.twittcloud.com. All led to similar screens with Trump logos and pay-per-click offers.

Posting for profit

The common theme between these accounts is therefore not a political stance, but the desire to generate revenue by attracting clicks.

Eleven of them posted, as their pinned tweet, an identical shortened Google URL (goo.gl/1s3Rmr), confirming that these accounts are the work of a single individual.

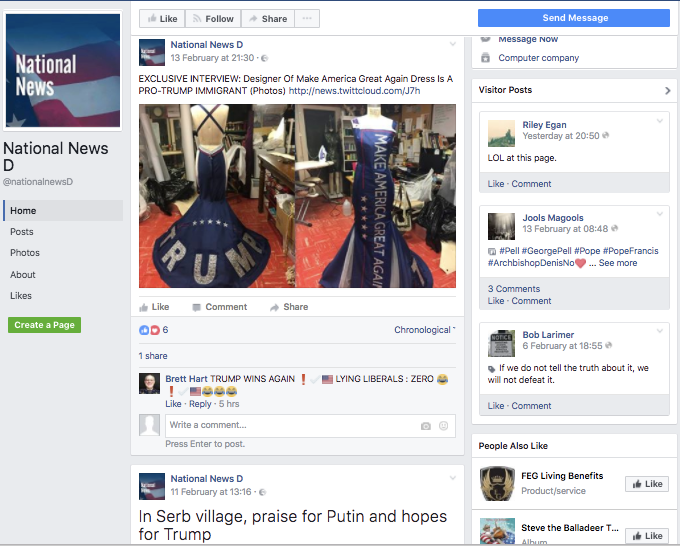
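Grouping accounts by their pinned link is a quick way to surface this kind of shared fingerprint. The Python sketch below is illustrative: the handles and the function name `group_by_pinned_link` are hypothetical, and only the short URL comes from the accounts described here.

```python
from collections import defaultdict


def group_by_pinned_link(pinned_links, min_accounts=2):
    """Map each pinned URL to the sorted handles that share it."""
    groups = defaultdict(list)
    for handle, url in pinned_links.items():
        if url:  # skip accounts with no pinned link
            groups[url].append(handle)
    return {
        url: sorted(handles)
        for url, handles in groups.items()
        if len(handles) >= min_accounts
    }


# Hypothetical handles; the shared short URL is the one quoted in the article.
pinned_links = {
    "example_bot_a": "goo.gl/1s3Rmr",
    "example_bot_b": "goo.gl/1s3Rmr",
    "example_bot_c": "goo.gl/1s3Rmr",
    "example_human": "example.com/my-blog",
    "example_no_pin": None,
}

shared = group_by_pinned_link(pinned_links)
print(shared)
```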

The link leads to a Facebook page called NationalNewsD, which appears to be a news aggregator. It posted relatively little in February; in early January, it was far more active. At that stage, it appeared to focus largely on Australian news, but progressively shifted its focus towards more US news, especially concerning Trump.

However, rather than simply leading the user to news stories, its posts again lead back to the pay-per-click page.

Some posts also contain a second link leading to a Twitter account called @judith_forex. This claims to be Australian (albeit in non-native English). However, the account’s behavior is botlike, posting the same stories as the other accounts mentioned above in the same order, and having the same high rate of tweeting and the same pinned tweet with the same Google short URL:

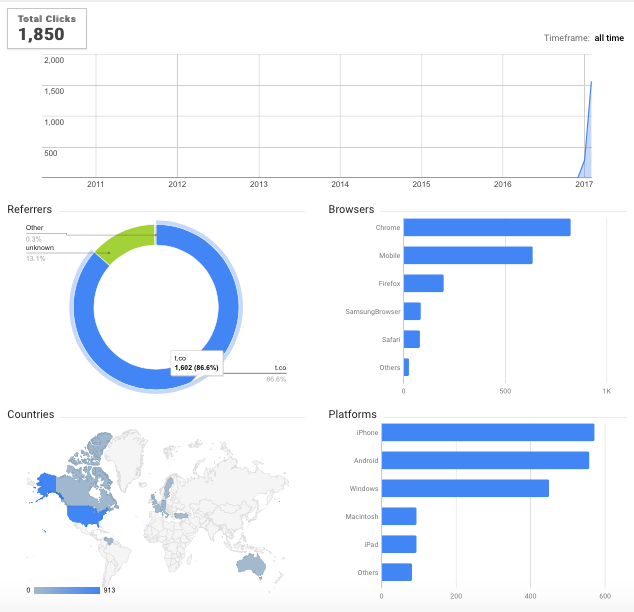

The Google analytics for the shortened link to the Facebook page reveal that it was created on January 22, and had received a modest 1,850 clicks by February 21. Almost 90 percent of the clicks were referred from Twitter, and by far the largest share came from the United States.

According to the detail of the analytics, 913 clicks came from the US, compared with 94 from Germany, 43 from Canada, 42 from the UK, 13 each from Australia and Venezuela, 9 from France, and 8 each from Sweden and Turkey.
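These figures can be turned into shares of the total with a short Python sketch. It assumes that the country breakdown and the 1,850 total come from the same snapshot of the analytics, which the text implies but does not state. The function name `click_shares` is illustrative.

```python
# Clicks per country as listed above; the total is the 1,850 reported clicks.
clicks_by_country = {
    "US": 913,
    "Germany": 94,
    "Canada": 43,
    "UK": 42,
    "Australia": 13,
    "Venezuela": 13,
    "France": 9,
    "Sweden": 8,
    "Turkey": 8,
}
TOTAL_CLICKS = 1850  # assumed to be the same snapshot as the breakdown


def click_shares(clicks, total):
    """Percentage of all clicks per country, rounded to one decimal place."""
    return {country: round(100 * n / total, 1) for country, n in clicks.items()}


shares = click_shares(clicks_by_country, TOTAL_CLICKS)
unlisted = TOTAL_CLICKS - sum(clicks_by_country.values())

print(shares["US"])       # 49.4
print(shares["Germany"])  # 5.1
print(unlisted)           # 707
```

On these numbers the US accounts for roughly half of all clicks, almost ten times the figure for Germany, while 707 clicks are not attributed to any of the listed countries.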

This is a botnet posing as a pro-Trump network, amplifying a range of sources beyond the Kremlin’s RT, and drawing its greatest traffic from users in the US. However, it does not appear to be a political network designed to propagate pro-Trump messaging: the content it posts is too varied, including outlets such as the Guardian and the Coventry Telegraph, neither of which is a hotbed of pro-Trump sentiment.

The likelihood is that this botnet is not political in origin, but purely commercial. By creating a network of fake Trump supporters, it targets genuine ones, drawing them towards pay-per-click sites, and therefore advertising revenue. It is neither large nor especially effective, but it highlights the ways in which fake accounts can mimic real ones in order to make a fast cent.

Ben Nimmo is Senior Fellow for Information Defense at the Atlantic Council’s Digital Forensic Research Lab (@DFRLab).