By

The growing stream of reporting on and data about fake news, misinformation, partisan content, and news literacy is hard to keep up with. This weekly roundup offers the highlights of what you might have missed.

3,500 Facebook ads. Democrats on the House Intelligence Committee released a trove of 3,500 Facebook ads purchased by Russia during the 2016 U.S. presidential election. Tony Romm in The Washington Post:

In many cases, the Kremlin-tied ads took multiple sides of the same issue. Accounts like United Muslims of America urged viewers in New York in March 2016 to “stop Islamophobia and the fear of Muslims.” That same account, days later, crafted an open letter in another ad that accused Clinton of failing to support Muslims before the election. And other accounts linked to the IRA sought to target Muslims: One ad highlighted by the House Intelligence Committee called President Barack Obama a “traitor” who had acted as a “pawn in the hands of Arabian Sheikhs.”

For two years, Russian agents proffered similar ads around issues like racism and causes like Black Lives Matter. They relied on Facebook features to target specific categories of users. An IRA-backed account on Instagram aimed a January 2016 ad about “white supremacy” specifically to those whose interests included HuffPost’s “black voices” section.

Another interesting thing about the ads: Some were totally benign, such as memes about Pokémon Go or SpongeBob SquarePants.

Mueller’s indictment of 13 Russians earlier this yr explained why: The accounts worked hard to appear natural, learning US holidays, posting during US awake hours.. (Sarah Frier, @sarahfrier, May 10, 2018)

The documents released Thursday show that “Russian agents continued advertising on Facebook well after the presidential election,” Romm writes. This week, Facebook rolled out new “issue ad” rules meant to combat Russian meddling. Buyers of ads on contentious topics will be required to reveal their identity, location, and who’s paying, and the list of topics is broad: abortion, budget, civil rights, crime, economy, education, energy, environment, foreign policy, government reform, guns, health, immigration, infrastructure, military, poverty, social security, taxes, terrorism, and values. Facebook has also banned all foreign advertising around Ireland’s upcoming referendum on whether to legalize abortion; Google followed suit.

Familiarity with a fake news headline increases your likelihood of rating it as accurate. Here’s an updated version of Gordon Pennycook and David Rand’s paper on how simple exposure to fake news increases its perceived accuracy a week later; it will be published soon in the Journal of Experimental Psychology. “Using actual fake news headlines presented as they were seen on Facebook, we show that even a single exposure increases subsequent perceptions of accuracy, both within the same session and after a week. Moreover, this ‘illusory truth effect’ for fake news headlines occurs despite a low level of overall believability, and even when the stories are labeled as contested by fact-checkers or are inconsistent with the reader’s political ideology.” A couple more bits from the paper:

— This effect doesn’t exist for statements that are completely implausible, like “The Earth is a perfect square.”

— The effect held even when viewers saw a warning (“Disputed by 3rd party fact-checkers”). It also held among fake (and real) news headlines that were inconsistent with one’s political ideology.

“It is possible to use repetition to increase the perceived accuracy even for entirely fabricated and, frankly, outlandish fake news stories that, given some reflection, people probably know are untrue,” the authors write.

When and why do people bullshit? A new paper by Wake Forest assistant professor of psychology John Petrocelli looks at the nature of bullshitting, “i.e., communicating with little to no concern for evidence or truth.” It “looks to be a common social activity,” but why do people do it? “The current investigation stands as the first empirical analysis designed to identify the social antecedents of bullshitting behavior…. As Pennycook et al. noted, bullshit is distinct from mere nonsense as it implies, but does not contain, adequate meaning or truth.”

In his experiments, Petrocelli found that, probably not surprisingly, “familiarity with the topic under discussion, social expectations to have an opinion, audience topic knowledge, and accountability conditions affect the degree to which self-perceived bullshitting is likely to occur.” People “bullshit frequently” when they think they are likely to get away with it; “bullshitting behavior appears to be most significantly reduced…when people do not feel obligated to provide an opinion and receiving social tolerance for bullshit is not expected to be easy.”

More research is required, but the effects of bullshitting could be far-reaching, Petrocelli suggests:

Future research may do well to examine bullshitting as a frame of mind and its potential connections with interest/openness to additional information, fake news reports, and resistance to persuasion. That is, relative to the mindset of one interested in evidence, a bullshitting mindset should be associated with less interest in additional information regardless of his/her attitude and regardless of the position the additional information is expected to support.

PolitiFact’s AMA. Here it is. A couple of excerpts:

Partisanship research! Get your partisanship research here! Roundup here by Thomas Edsall.

“I put myself on a weeklong Radio Sputnik-only diet.” PBS NewsHour reporter Elizabeth Flock spent a week getting all of her news from the Russian government–funded radio station Radio Sputnik, sister site Russia Today, and their social media platforms, and found it very disorienting.

Day 1 of the diet was April 10, the first of two days Facebook CEO Mark Zuckerberg spent testifying before Congress about Facebook’s role in sharing user data that may have helped Donald Trump win the presidency. While I saw later that his testimony had dominated headlines, there was no mention of it on Sputnik’s site for most of the day. Instead, Sputnik led with a story about Google’s supposed search manipulation, claiming it had favored Hillary Clinton in the election…

Meanwhile, on the radio, Nixon and Stranahan were busy talking about the Skripals, saying that the British government was not happy the Skripals had woken up after being poisoned. Stranahan called the Skripal poisoning a “false flag” attack by Western government — false flag being a favored conspiracy theory term for a secret operation intended to deceive. Over the next few days, I counted more than a dozen uses of the phrase “false flag” on the outlet. The phrase often served to morph factual news stories into a murky morass.

On Day 2, I turned on breaking news alerts on my phone from Sputnik. I was alerted that the U.S. was “using ‘fabrications and lies’ as excuse to target Syria,” in a story that relied on Syria’s own state news agency. I was also told that “soil examination in Douma didn’t show any poisonous substances,” according to the Russian ministry of defense, even though the Organisation for the Prohibition of Chemical Weapons hadn’t released its findings yet. When I visited Sputnik’s Instagram page, I found mostly dog photos, workout life hacks and a positive video of Putin for International Women’s Day.

Day 3, I turned on 105.5 FM on my way to work, and Nixon and Stranahan were starting to cast their doubts on the validity of the chemical weapons attack in Syria. They recalled the Nayirah testimony of 1990, in which the Kuwaiti ambassador’s daughter gave false testimony of human rights abuses by Iraqi soldiers to bolster support for the Persian Gulf War.

I broke my media diet then to look up the Nayirah testimony. I briefly entertained the possibility that the West could have distorted what happened in Douma to build support for a U.S. strike there. I even found myself researching more theories about what happened to the Skripals. It didn’t matter that later I would see how the facts had been twisted. It was in moments like these, listening to Sputnik, that I could see how you’d begin to believe that everything was possible.


This piece is part of a series: Real News About Fake News.


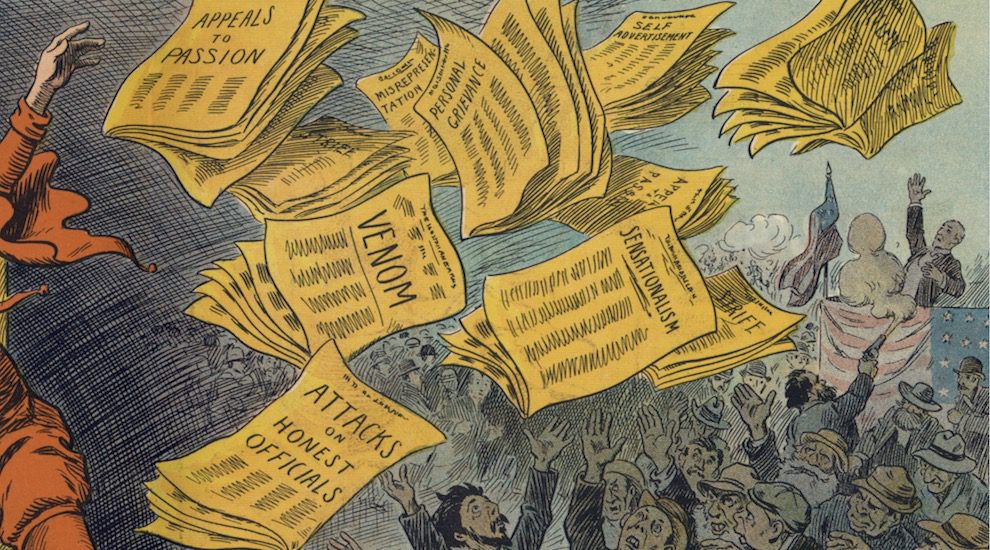

Illustration from L.M. Glackens’ The Yellow Press (1910) via The Public Domain Review.