By Nancy Watzman, Katie Donnelly, and Jessica Clark, for NiemanLab

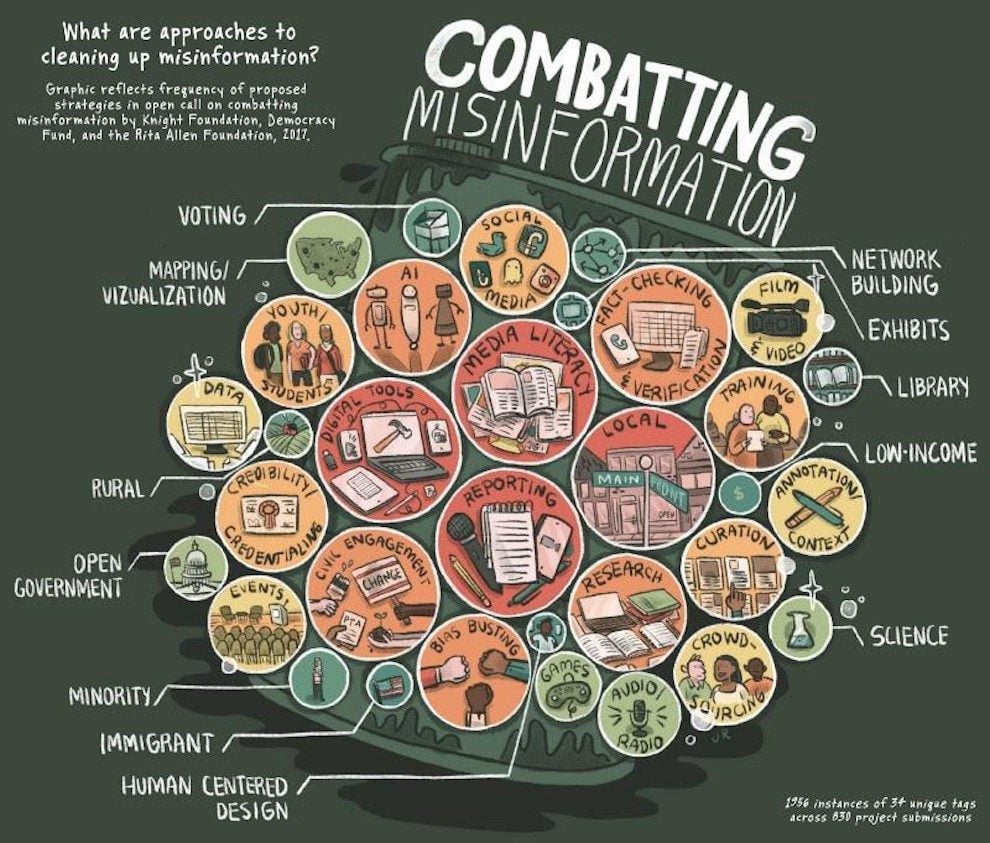

In March 2017, the Knight Foundation, Democracy Fund, and the Rita Allen Foundation hosted a prototype open call for ideas on countering misinformation, which attracted 830 submissions. As described by the Democracy Fund’s Joshua Stearns, “These open calls are a way for foundations to catalyze energy and surface new ideas, bringing new people and sectors together to tackle the complex challenges related to misinformation.”

From the 830 applicants, these foundations awarded 20 projects a total of $1 million. As Stearns notes, this is just one of several new efforts by funders to confront the problem of misinformation, including the News Integrity Initiative and the Knight Foundation’s commission on “Trust, Media and Democracy.” Since the call launched, the number of such efforts has continued to grow, including new ones involving social media platforms directly. A philanthropy-funded research collaborative with Facebook recently announced its first request for proposals from researchers. WhatsApp also announced a call for proposals. At the same time, as Stearns points out, while public discussion about misinformation is at a high pitch, “issues of trust in journalism extend far back into our nation’s history.”

In an effort to learn more about different approaches to fighting mis- and dis-information, Dot Connector Studio (DCS) developed simple tags to code the entire set of proposals by subject and strategy, such as “media literacy,” “AI,” and “mapping/visualization.” (Each project could be categorized with multiple tags.)

While the applications remain confidential, DCS wishes to share these tags with the larger research community in the hope that they may be helpful in ongoing efforts. Creating such taxonomies in emerging fields can also help practitioners and funders to connect with, compare, and refine similar projects. This is similar in spirit to First Draft News’ publication of definitions and taxonomies for the study of information disorder; the Hewlett Foundation-sponsored review of scientific literature on disinformation; and other efforts to share information on best practices in this sphere.

The tags DCS used changed over time, as we got deeper into the dataset. This process itself yields insights. For example, DCS collapsed more specific tags into the catch-all category of “minority,” because tags such as “African American” or “Latino” applied only to a handful of projects. Similarly, DCS struck tags pertaining to specific audiences, such as “conservative,” “disabled,” “elderly/senior,” and “women,” because there were not enough applicants to make such tags useful.[1] And other distinctions began to emerge that prompted the creation of new tags.[2]

Here’s the full list of tags.

List of tags and definitions

- AI: Projects that employ artificial intelligence to augment information.

- Annotation/context: Projects that add to existing texts by providing additional notes, background information or contextual information.

- Audio/radio: Projects that use radio or podcasts to deliver information.

- Bias busting: Projects designed to puncture filter bubbles across ideological divides or increase cross-cultural understanding.

- Civic engagement: Projects focused on bringing community members together to discuss issues or connecting them with government representatives.

- Credibility/credentialing: Projects designed to assess, rank, or rate credibility; projects that mark specific information sources as high-quality or trustworthy.

- Crowdsourcing: Projects that collect and employ input from users.

- Curation: Projects that select, organize, and present a set of information sources.

- Data: Projects that yield a new set of machine-readable information usable by developers, by data journalists, in visualizations, etc. (For example, an API.)

- Digital tools: Projects that create an app, widget, plugin, or interactive feature designed to provide mobile access or enhance users’ experience on existing digital interfaces.

- Events: Projects that involve live community events, such as town halls.

- Exhibits: Projects that include physical displays of collections of work.

- Fact-checking/verification: Projects that flag and/or correct false information; projects that provide means to confirm that underlying material is authentic (e.g., a way to track whether a photo is faked, a video moment is in proper context, etc.).

- Film/video: Projects that use film, video, or television to deliver information.

- Games: Projects that develop games or employ game design or game theory concepts.

- Human-centered design: Projects that take user experience and cognition into account in their design.

- Immigrants: Projects geared towards immigrants or focused on the topic of immigration.

- Library: Projects in which libraries played a key role, either as a partner or as a physical space.

- Local: Projects that take place in specific communities.

- Low-income: Projects geared towards low-income people or focused on income inequality.

- Mapping/visualization: Projects that illustrate and showcase data findings using imagery.

- Media literacy: Projects designed to teach individuals how to think critically about media, how media is constructed and how to be discerning about information sources.

- Minority: Projects geared towards minority populations or focused on race or ethnicity.

- N/A: Project applications that are incomplete, incoherent, or make no connection to misinformation.

- Network building: Proposals to strengthen multiple journalism organizations by providing systematic support.

- Open government: Projects that make government data accessible and available.

- Reporting: Projects that center on original writing and reporting.

- Research: Projects, typically from universities, that are designed to investigate hypotheses and uncover new information.

- Rural: Projects geared towards rural populations or intended to address rural/urban divides.

- Science: Projects focused on improving communication and correcting misinformation in the realm of science (particularly climate science).

- Social media: Projects in which social media (Twitter, Facebook, etc.) is a main focal point or that involve developing new social media platforms.

- Training: Projects that involve teaching a set of skills to a group of people, including professional development on misinformation for journalists and educators, and training citizens on journalism skills.

- Voting: Projects that are designed to make it easier for citizens to register to vote and participate in elections.

- Youth/students: Projects geared specifically towards young people, including college students and young adults.

Nancy Watzman, Katie Donnelly, and Jessica Clark are director of strategic initiatives, associate director, and founder and director, respectively, of Dot Connector Studio. Note: Members of the Dot Connector Studio team have worked on applications for this and previous open calls organized by the Knight Foundation, and Knight has in the past been a funder of Nieman Lab. This piece originally appeared on Medium.

[1] Among the notable changes:

- DCS pulled out journalism subcategories into their own tags: “training,” “data,” and “local.” “Journalism” was too broad to be applied effectively, and was changed to “reporting.” (Investigative journalism projects are coded under “reporting.”)

- “Archiving” was removed.

- “AI/bots” changed to “AI.”

- “Media literacy education” changed to “media literacy” to include projects outside of the educational realm.

- “Fact-checking” was combined with “verification” due to overlap among these types of projects.

- “Mapping” changed to “mapping/visualization” due to overlap among these types of projects.

- “Original research” changed to “research” to include applied research as well.

- “Mobile/app” changed to “digital tools” to include browser extensions and widgets as well.

[2] New tags that emerged include:

- Tags for various types of media/platform, such as “audio/radio,” “film/video,” “games,” “exhibits,” and “events.”

- Tags for common strategies, including “annotation/context,” “bias busting” (which we use to describe projects designed to pierce filter bubbles or increase cross-cultural understanding), “credibility/credentialing,” “network building,” and “open government.”

Finally, DCS observed multiple projects that were located in libraries or focused on science, and therefore added the tags “library” and “science.”
